Data center interconnection applications have become an important and rapidly growing part of the network. This article will explore several reasons for this growth, including market changes, network architecture changes, and technology changes.

The huge growth of data has driven the construction of data center campuses, especially very large (hyperscale) data centers. The several buildings on a campus must now be connected with sufficient bandwidth. How much bandwidth is needed to maintain the flow of information between data centers within a single campus? Today, each data center can transmit to the other data centers at up to 200 Tbps, and higher bandwidth will be needed in the future.

What drives such a huge bandwidth demand between campus buildings?

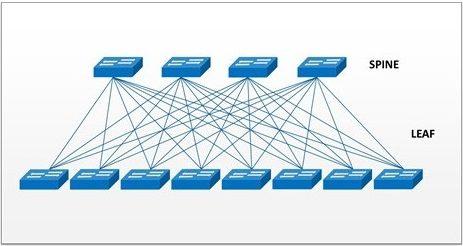

First, east-west traffic is growing exponentially, driven by device-to-device communication. The second trend is the adoption of flatter network architectures, such as spine-and-leaf or Clos networks. The goal is a single large network fabric across the campus, which requires a large number of connections between devices.

Traditionally, data centers are built on a three-tier topology consisting of core switches, aggregation switches, and access switches. Although mature and widely deployed, the traditional three-tier architecture no longer meets the growing workload and latency requirements of hyperscale data center campus environments. In response, today's very large data centers are migrating to a spine-and-leaf architecture (see Figure 2). In a spine-and-leaf architecture, the network is divided into two tiers. The spine tier aggregates packets and routes them to their final destination, and the leaf tier connects hosts and load balancers.

Ideally, each leaf switch fans out to every spine switch to maximize connectivity between servers, so the network requires high-port-count spine (core) switches. In many environments, the large spine switches are connected to a higher-level spine switch, often referred to as a campus or aggregation spine, to tie all the buildings in the campus together. Because of this flatter network structure and the use of high-end switches, we expect networks to become larger, more modular, and more scalable.
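To make the fan-out concrete, the following minimal sketch counts the links in a fabric where every leaf connects to every spine. The function names and the fabric size (48 leaves, 8 spines) are hypothetical values chosen for illustration; they do not come from any specific deployment.

```python
def leaf_spine_links(num_leaves: int, num_spines: int, links_per_pair: int = 1) -> int:
    """Total leaf-to-spine links when every leaf connects to every spine."""
    if num_leaves < 0 or num_spines < 0 or links_per_pair < 1:
        raise ValueError("counts must be non-negative and links_per_pair >= 1")
    return num_leaves * num_spines * links_per_pair


def ports_per_spine(num_leaves: int, links_per_pair: int = 1) -> int:
    """Downlink ports each spine switch needs to reach every leaf."""
    return num_leaves * links_per_pair


# Hypothetical fabric: 48 leaf switches, 8 spine switches, one link per pair.
print(leaf_spine_links(48, 8))  # 384
print(ports_per_spine(48))      # 48
```

Because the link count is the product of the two tiers, adding leaves raises both the total number of links and the port count required on every spine, which is why the spine tier needs switches with many ports.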

How can the best connection be provided in the most cost-effective way?

The industry has evaluated multiple methods, but the common pattern is to transmit at a lower rate over a large number of fibers. To reach 200 Tbps with this method, each data center interconnect requires more than 3,000 fibers. When you consider the fiber needed to connect each data center to every other data center on a single campus, the total can easily exceed 10,000 fibers!
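The arithmetic behind these fiber counts can be sketched as follows. The per-link rate (100 Gbps), the two fibers per duplex link, and the four-building campus are assumptions chosen for illustration; the article states only the 200 Tbps target and the resulting orders of magnitude.

```python
import math


def fibers_for_capacity(target_tbps: float, link_rate_gbps: float, fibers_per_link: int) -> int:
    """Fibers needed to carry target_tbps using links of link_rate_gbps each."""
    links = math.ceil(target_tbps * 1000 / link_rate_gbps)
    return links * fibers_per_link


def fibers_at_building(num_buildings: int, fibers_per_interconnect: int) -> int:
    """Fibers terminating at one building that connects to every other building."""
    return (num_buildings - 1) * fibers_per_interconnect


# Assumed: 100 Gbps duplex links (2 fibers each), 4 buildings on the campus.
per_interconnect = fibers_for_capacity(200, 100, 2)
print(per_interconnect)                         # 4000
print(fibers_at_building(4, per_interconnect))  # 12000
```

Under these assumptions a single interconnect already needs several thousand fibers, and a building connected to three neighbors terminates more than 10,000, which is consistent with the scale described above.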

A common question is when to use DWDM (dense wavelength division multiplexing) or other technologies to increase the throughput per fiber instead of increasing the number of fibers. At present, data center interconnect applications up to 10 kilometers usually use CWDM (coarse wavelength division multiplexing) transceivers at 1310 nm, which do not match the 1550 nm transmission wavelength of DWDM systems. Therefore, high-fiber-count cables are used between data centers to support large-scale interconnection.

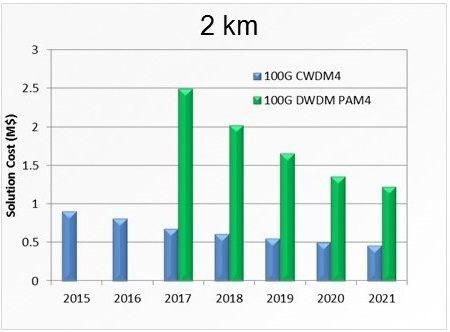

The next question is when the 1310 nm transceivers in access switches will be replaced with pluggable DWDM transceivers, with a multiplexer unit added. The answer is when, or if, DWDM becomes a cost-effective way to interconnect data centers on a campus.

To estimate the feasibility of this conversion, we need to compare the price of DWDM transceivers with that of existing transceivers. Based on price modeling of the entire link, the current forecast is that connections based on a fiber-rich 1310 nm architecture will remain the lower-cost option for the foreseeable future (see Figure 3). The PSM4 (8-fiber) alternative has proven cost-effective for links shorter than 2 kilometers, which is another factor driving up fiber counts.
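The shape of such a whole-link comparison can be sketched as below. This is not the price model behind the forecast above: the cost structure, the function names, the 40 channels per fiber pair, and every price are placeholder assumptions in arbitrary relative units, included only to show which terms trade off against each other.

```python
import math


def fiber_rich_cost(links: int, length_km: float, xcvr_price: float,
                    fiber_price_per_km: float, fibers_per_link: int = 2) -> float:
    """Cost of a fiber-rich design: two transceivers plus dedicated fibers per link."""
    return links * (2 * xcvr_price + fibers_per_link * length_km * fiber_price_per_km)


def dwdm_cost(links: int, length_km: float, xcvr_price: float, mux_price: float,
              fiber_price_per_km: float, channels_per_pair: int = 40) -> float:
    """Cost of a DWDM design: many links share one fiber pair through a mux at each end."""
    pairs = math.ceil(links / channels_per_pair)
    return links * 2 * xcvr_price + pairs * (2 * mux_price + 2 * length_km * fiber_price_per_km)


# Placeholder relative prices (NOT market data): 40 links over 2 km.
print(fiber_rich_cost(40, 2, xcvr_price=10, fiber_price_per_km=1))           # 960
print(dwdm_cost(40, 2, xcvr_price=30, mux_price=50, fiber_price_per_km=1))   # 2504
```

In this sketch the fiber term grows with distance while the transceiver term does not, so a transceiver price premium dominates on short campus links and the fiber savings of DWDM matter more as links get longer.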

How will extreme-density networks evolve?

The most important question at the moment is whether fiber counts will stop at 3,456 or go higher. Current market trends indicate that more than 5,000 fibers will be required. To keep the infrastructure at a manageable size, the pressure to shrink fiber optic cables will increase. Because fiber packing density is already approaching its physical limits, meaningfully reducing cable diameter any further has become more challenging.

One increasingly popular method is to use fiber with a coating diameter reduced from the usual 250 µm to 200 µm. The core and cladding dimensions remain the same, so there is no change in optical performance. However, this reduction can greatly reduce the total cross-sectional area of a cable that contains hundreds to thousands of fibers. This technology has been applied in some cable designs and is used by manufacturers for miniature loose tube cables.
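The effect of the thinner coating follows from the circle area formula, since area scales with the square of the diameter. The sketch below computes the saving for the coated fibers alone; it assumes ideal circular fibers and ignores the jacket, strength members, and packing gaps, so the reduction for a finished cable will differ. The function names are illustrative.

```python
import math


def coated_fiber_area_um2(coating_diameter_um: float) -> float:
    """Cross-sectional area of one coated fiber, in square micrometers."""
    return math.pi * (coating_diameter_um / 2) ** 2


def area_reduction(old_diameter_um: float, new_diameter_um: float) -> float:
    """Fractional reduction in per-fiber area when the coating diameter shrinks."""
    return 1 - (new_diameter_um / old_diameter_um) ** 2


print(round(area_reduction(250, 200), 2))  # 0.36

# Total coated-fiber area for a 3,456-fiber cable, converted to mm^2.
for diameter_um in (250, 200):
    total_mm2 = 3456 * coated_fiber_area_um2(diameter_um) / 1e6
    print(diameter_um, round(total_mm2, 1))
```

A 20% smaller coating diameter therefore removes about 36% of the area occupied by each coated fiber, and that saving is multiplied across every fiber in the cable.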

Another important issue is how best to provide data center interconnects between locations that are farther apart and not on the same physical campus. In a typical data center campus environment, the interconnect length does not exceed 2 kilometers. These relatively short distances allow a single fiber optic cable to provide connectivity without any splice points. However, as data centers are also being deployed around big cities to reduce latency, distances are increasing and can approach 75 kilometers. Extreme-density cable designs are less attractive in these applications because it is more expensive to run a large number of fibers over long distances. In these cases, more traditional DWDM systems will continue to be preferred, running at 40G and higher speeds over fewer fibers.
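The distance guidance scattered through this article can be collected into a simple rule of thumb. The sketch below uses only the thresholds mentioned in the text (under 2 km, up to 10 km, and longer metro distances); the function name and the hard cutoffs are simplifying assumptions, and a real design decision would rest on a full link cost model rather than distance alone.

```python
def suggest_interconnect(distance_km: float) -> str:
    """Rough option suggested by the article's distance thresholds (illustrative only)."""
    if distance_km <= 0:
        raise ValueError("distance_km must be positive")
    if distance_km < 2:
        # Campus scale: parallel optics over many fibers are cost-effective.
        return "PSM4 parallel optics (8 fibers per link)"
    if distance_km <= 10:
        # Up to 10 km: 1310 nm CWDM transceivers over high-fiber-count cable.
        return "CWDM 1310 nm over high-fiber-count cable"
    # Metro distances: fewer fibers, more wavelengths per fiber.
    return "DWDM over fewer fibers"


for km in (1.5, 8, 75):
    print(km, "->", suggest_interconnect(km))
```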

We can expect that, as network owners prepare for the upcoming rollout of fiber-intensive 5G, demand for extreme-density fiber optic cables will spread from the data center environment to the access market. Developing products that scale effectively to the required fiber counts without overwhelming existing ducts and in-building environments will remain a challenge for the industry.